CMSFlow Matrix Solver

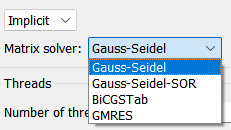

Figure: CMS Flow General Tab Matrix Solvers

Revision as of 17:22, 20 December 2022

The choice of iterative solver is a key factor in the overall performance of the model. CMS has three iterative solvers available, each described in more detail below: 1) a GMRES variant, 2) BiCGStab, and 3) Gauss-Seidel. The default solver for the algebraic equations is a variant of the GMRES (Generalized Minimum Residual) method (Saad 1993).

Gauss-Seidel

The simplest iterative solver implemented in CMS is the point-implicit Gauss-Seidel solver. It may be applied with or without Successive Over-Relaxation (SOR) to speed up convergence (Patankar 1980). Although the Gauss-Seidel method requires more iterations to converge, its overall efficiency may be higher than that of GMRES and BiCGStab because each iteration is computationally inexpensive and the code is parallelized. However, the GMRES and BiCGStab methods are more robust and perform better for large time steps.
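
To make the point-implicit update concrete, the following is a minimal sketch of a Gauss-Seidel solve on a small dense system. The function name `gauss_seidel`, the dense list-of-rows storage, and the stopping rule (largest change in any unknown below `tol`) are illustrative assumptions, not the CMS implementation; in particular, this sequential sweep does not reproduce the parallelization described above.

```python
def gauss_seidel(A, b, x0=None, tol=1e-10, max_iter=500):
    """Solve A x = b with point Gauss-Seidel sweeps (hypothetical helper).

    A is a dense list of rows with a nonzero diagonal. Returns (x, iterations).
    """
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    for it in range(1, max_iter + 1):
        max_change = 0.0
        for i in range(n):
            # Off-diagonal sum: x[j] for j < i already holds this sweep's new value
            sigma = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new_xi = (b[i] - sigma) / A[i][i]
            max_change = max(max_change, abs(new_xi - x[i]))
            x[i] = new_xi  # update in place so later rows use it immediately
        if max_change < tol:
            return x, it
    return x, max_iter


# Small, strictly diagonally dominant, non-symmetric system with solution [1, 2, 3]
A = [[4.0, -1.0, 0.0],
     [-2.0, 5.0, -1.0],
     [0.0, -1.0, 3.0]]
b = [2.0, 5.0, 7.0]
x, iterations = gauss_seidel(A, b)
print(x, iterations)
```

Each sweep only needs one pass over the matrix rows, which is why an individual iteration is cheap even though many sweeps may be needed.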

Gauss-Seidel SOR

The point-implicit Gauss-Seidel solver can also be run with Successive Over-Relaxation (SOR), which scales each Gauss-Seidel correction by a relaxation factor to speed up convergence (Patankar 1980). Unlike GMRES and BiCGStab, the Gauss-Seidel solver is highly parallelized. Even though it requires more iterations to converge, its overall efficiency can be much higher than that of GMRES and BiCGStab because each iteration is inexpensive and the work is parallelized. However, GMRES and BiCGStab are more robust and perform better for stiff problems.
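
A minimal sketch of the relaxed update follows, assuming the textbook SOR form in which the new value is a blend `(1 - omega) * x_old + omega * x_gs` of the previous value and the plain Gauss-Seidel value. The helper name `sor`, the default `omega`, and the test matrix are hypothetical; how CMS selects its relaxation factor is not stated here.

```python
def sor(A, b, omega=1.2, x0=None, tol=1e-10, max_iter=1000):
    """Gauss-Seidel with successive over-relaxation (hypothetical helper).

    omega = 1 gives plain Gauss-Seidel; 1 < omega < 2 over-relaxes.
    Returns (x, iterations).
    """
    if not 0.0 < omega < 2.0:
        raise ValueError("SOR needs 0 < omega < 2")
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    for it in range(1, max_iter + 1):
        max_change = 0.0
        for i in range(n):
            sigma = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x_gs = (b[i] - sigma) / A[i][i]               # plain Gauss-Seidel value
            new_xi = (1.0 - omega) * x[i] + omega * x_gs  # relaxed blend
            max_change = max(max_change, abs(new_xi - x[i]))
            x[i] = new_xi
        if max_change < tol:
            return x, it
    return x, max_iter


# Symmetric positive definite system (solution [1, 2, 3]); SOR converges
# for any 0 < omega < 2 on such matrices.
A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
b = [2.0, 4.0, 10.0]
for omega in (1.0, 1.2, 1.5):
    x, iterations = sor(A, b, omega=omega)
    print(omega, iterations, x)
```

The best relaxation factor is problem dependent, so the loop simply prints the iteration count for a few values of `omega` for comparison.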

BiCGStab

The BiCGStab (BiConjugate Gradient Stabilized) iterative solver is also a Krylov subspace solver and is applicable to both symmetric and non-symmetric matrices (Saad 1996). Like the GMRES solver, BiCGStab uses ILUT as a preconditioner (Saad 1994). The method can be viewed as the standard BiConjugate Gradient (BiCG) method in which each iterative step is followed by a restarted GMRES step (GMRES(1)). One advantage of BiCGStab is that its memory requirements do not grow with the number of iterations and its computational cost is lower than that of GMRES (for fewer than four iterations). For large problems, BiCGStab scales better than GMRES.
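
The sketch below shows the two halves of a BiCGStab iteration: a BiCG step along a search direction, then a GMRES(1) step that picks `omega` to minimize the norm of `s - omega * t`. It follows the standard right-preconditioned formulation on dense lists. ILUT is not implemented here; a simple diagonal (Jacobi) preconditioner is swapped in purely as a stand-in, and the names `bicgstab` and `jacobi_preconditioner` are hypothetical.

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _matvec(A, x):
    return [_dot(row, x) for row in A]


def jacobi_preconditioner(A):
    """Diagonal (Jacobi) stand-in for ILUT: returns r -> D^{-1} r."""
    diag = [A[i][i] for i in range(len(A))]
    return lambda r: [ri / di for ri, di in zip(r, diag)]


def bicgstab(A, b, precond=None, tol=1e-10, max_iter=200):
    """Right-preconditioned BiCGStab for A x = b (hypothetical helper).

    precond(r) should approximate A^{-1} r (identity if None).
    Returns (x, iterations).
    """
    n = len(b)
    M = precond if precond is not None else (lambda r: list(r))
    x = [0.0] * n
    r = list(b)                       # residual b - A x for x = 0
    r_hat = list(r)                   # fixed "shadow" residual
    b_norm = math.sqrt(_dot(b, b)) or 1.0
    if math.sqrt(_dot(r, r)) / b_norm < tol:
        return x, 0
    rho_prev = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    for it in range(1, max_iter + 1):
        rho = _dot(r_hat, r)
        if rho == 0.0:
            raise ArithmeticError("BiCGStab breakdown: rho == 0")
        beta = (rho / rho_prev) * (alpha / omega)
        p = [ri + beta * (pk - omega * vk) for ri, pk, vk in zip(r, p, v)]
        # BiCG half: step along the preconditioned search direction
        p_hat = M(p)
        v = _matvec(A, p_hat)
        alpha = rho / _dot(r_hat, v)
        s = [ri - alpha * vk for ri, vk in zip(r, v)]
        if math.sqrt(_dot(s, s)) / b_norm < tol:
            x = [xi + alpha * pk for xi, pk in zip(x, p_hat)]
            return x, it
        # GMRES(1) half: omega minimizes ||s - omega * t||
        s_hat = M(s)
        t = _matvec(A, s_hat)
        omega = _dot(t, s) / _dot(t, t)
        x = [xi + alpha * pk + omega * sk for xi, pk, sk in zip(x, p_hat, s_hat)]
        r = [si - omega * ti for si, ti in zip(s, t)]
        if math.sqrt(_dot(r, r)) / b_norm < tol:
            return x, it
        rho_prev = rho
    return x, max_iter


A = [[4.0, -1.0, 0.0],
     [-2.0, 5.0, -1.0],
     [0.0, -1.0, 3.0]]
b = [2.0, 5.0, 7.0]
x_plain, it_plain = bicgstab(A, b)
x_jac, it_jac = bicgstab(A, b, precond=jacobi_preconditioner(A))
print(x_plain, it_plain)
print(x_jac, it_jac)
```

Only a fixed handful of vectors (`r`, `r_hat`, `p`, `v`, `s`, `t`) is kept, which illustrates why the memory footprint stays constant from one iteration to the next.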

GMRES

The default solver is a variant of the GMRES (Generalized Minimum Residual) method (Saad 1993). The original GMRES method (Saad and Schultz 1986) uses the Arnoldi process to reduce the coefficient matrix to upper Hessenberg form and, at every step, minimizes the norm of the residual vector over a Krylov subspace. The variant recommended by Saad (1993), known as flexible GMRES, allows the preconditioning to change at every iteration step. An incomplete LU factorization with threshold (ILUT; Saad 1994) is used as the preconditioner to speed up convergence. The GMRES solver is applicable to both symmetric and non-symmetric matrices and gives the smallest residual for a fixed number of iterations. However, because every new Krylov basis vector must be stored and orthogonalized against all previous ones, its memory requirements and computational cost grow with the iteration count and become expensive for large systems.
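
A minimal sketch of restarted flexible GMRES follows. Arnoldi with modified Gram-Schmidt builds the orthonormal Krylov basis `V`, Givens rotations keep the Hessenberg least-squares problem upper triangular, and the solution is assembled from the preconditioned vectors `Z`, which is what lets the preconditioner change at each step. The names `fgmres`, the `precond(v, j)` callback signature, and the default `restart=20` are assumptions for illustration; ILUT is not implemented, so the usage example passes a Jacobi (diagonal) stand-in.

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _norm(u):
    return math.sqrt(_dot(u, u))


def _matvec(A, x):
    return [_dot(row, x) for row in A]


def fgmres(A, b, precond=None, restart=20, tol=1e-10, max_restarts=50):
    """Restarted flexible GMRES for A x = b (hypothetical helper).

    precond(v, j) approximates A^{-1} v and may vary with inner step j;
    identity if None, which reduces to plain restarted GMRES.
    Returns (x, total_inner_steps).
    """
    n = len(b)
    M = precond if precond is not None else (lambda v, j: list(v))
    x = [0.0] * n
    b_norm = _norm(b) or 1.0
    total = 0
    for _ in range(max_restarts):
        r = [bi - axi for bi, axi in zip(b, _matvec(A, x))]
        beta = _norm(r)
        if beta / b_norm < tol:
            return x, total
        V = [[ri / beta for ri in r]]   # orthonormal Krylov basis
        Z = []                          # preconditioned directions
        H = []                          # rotated Hessenberg columns (upper triangular)
        cs, sn = [], []                 # Givens rotation coefficients
        g = [beta]                      # rotated right-hand side beta * e1
        for j in range(restart):
            z = M(V[j], j)
            Z.append(z)
            w = _matvec(A, z)
            h = []
            for i in range(j + 1):      # Arnoldi step, modified Gram-Schmidt
                hij = _dot(w, V[i])
                w = [wk - hij * vk for wk, vk in zip(w, V[i])]
                h.append(hij)
            h_next = _norm(w)
            for i in range(j):          # apply earlier rotations to the new column
                hi, hi1 = h[i], h[i + 1]
                h[i] = cs[i] * hi + sn[i] * hi1
                h[i + 1] = -sn[i] * hi + cs[i] * hi1
            denom = math.hypot(h[j], h_next)  # new rotation zeroes h_next
            c, s = h[j] / denom, h_next / denom
            cs.append(c)
            sn.append(s)
            h[j] = denom
            g.append(-s * g[j])         # |g[j + 1]| is the current residual norm
            g[j] = c * g[j]
            H.append(h)
            total += 1
            if abs(g[j + 1]) / b_norm < tol or h_next == 0.0:
                break
            V.append([wk / h_next for wk in w])
        k = len(H)                      # back-substitution: R y = g
        y = [0.0] * k
        for i in range(k - 1, -1, -1):
            acc = g[i] - sum(H[m][i] * y[m] for m in range(i + 1, k))
            y[i] = acc / H[i][i]
        for m in range(k):              # flexible update uses Z, not V
            x = [xi + y[m] * zi for xi, zi in zip(x, Z[m])]
    return x, total


A = [[4.0, -1.0, 0.0],
     [-2.0, 5.0, -1.0],
     [0.0, -1.0, 3.0]]
b = [2.0, 5.0, 7.0]
diag = [A[i][i] for i in range(len(A))]
x_plain, steps_plain = fgmres(A, b)
x_prec, steps_prec = fgmres(A, b, precond=lambda v, j: [vi / di for vi, di in zip(v, diag)])
print(x_plain, steps_plain)
print(x_prec, steps_prec)
```

The lists `V` and `Z` grow by one vector per inner step, which is the memory cost noted above; the `restart` argument caps that growth at the price of possibly slower convergence.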

Reference: CMS Flow Numerical Models